Large-Scale Computations, Data, and Analytics

Large-scale computations, data, and analytics play a significant role in the research mission of the Daniel Guggenheim School of Aerospace Engineering. Faculty have run simulations with more than 6 trillion grid points on Summit, the supercomputer at Oak Ridge National Laboratory.

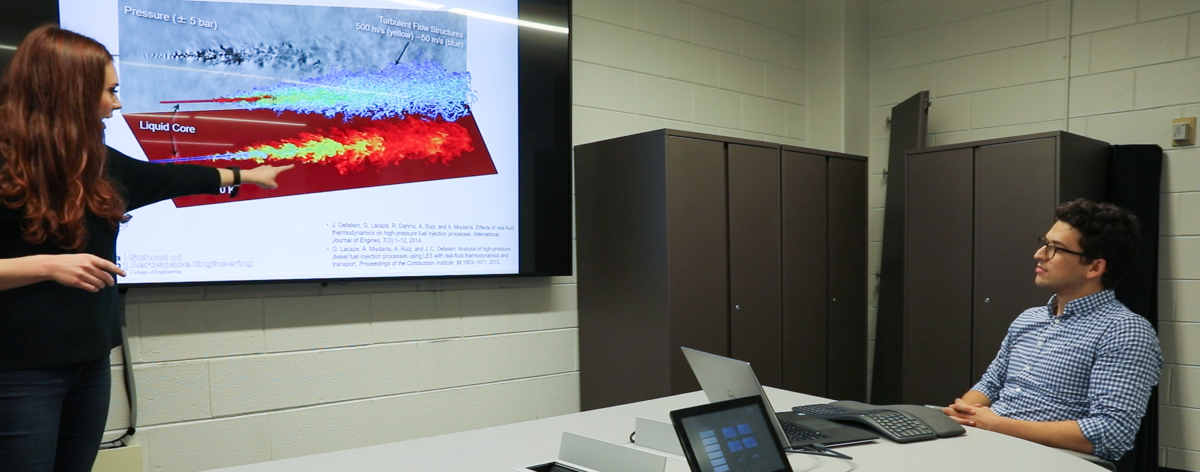

Faculty make ample use of the School's computing capacity, conducting simulations of fluid turbulence that both advance scientific understanding and enable progress in theory and modeling that would otherwise be impossible. Substantial advances have been made in algorithm development for highly efficient parallel execution on heterogeneous GPU platforms, which are increasingly important as high-performance computing moves toward exascale. These very large computations allow our researchers to examine the fine details of so-called extreme events in turbulence, where specific quantities may take instantaneous values more than a thousand times the average value and thus call for advanced modeling or prediction techniques. Analyzing petabyte-sized data collections is an arduous endeavor as well.
